A Year with Cachex in Production

A little over a year ago I began working on what would become my first real project in Elixir. Without going into too much detail, it was a re-imagining of a legacy system designed to handle many incoming data streams quickly and reliably. It seemed like a good fit for Elixir, Erlang and OTP, so I made my case and was given the go-ahead to build it as I deemed necessary. As this legacy system was (at the time) implemented in PHP, it was also a good time to explore the improvements we could (and should) make now that we had ways to easily store various types of state in memory.

One such improvement was caching message IDs in this layer, specifically to de-duplicate a high volume of repeated messages coming in (due to things such as client retries, rogue processes, and general bugs). Processing these duplicates further down the pipeline was wasted effort, as it was essentially a no-op, so we decided to try to eliminate them as they came in (note that we were aiming for improvement, not total de-duplication). Fortunately for us, Erlang ships with a neat in-memory storage system: Erlang Term Storage (ETS). A term is basically a value inside the Erlang VM, so you can throw whatever you want into ETS and be good to go. It seemed like a good fit, so I planned to write a small layer over ETS as an abstraction so that people new to the codebase wouldn't have to dive straight into an Erlang layer to do anything.
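To make the idea concrete, here is a minimal sketch of best-effort de-duplication on raw ETS. It is not the code from the project described here; the module name `DedupeSketch`, the table name, and the choice to store a timestamp as the value are all assumptions made for illustration.

```elixir
defmodule DedupeSketch do
  # Hypothetical module and table name, used for illustration only.
  @table :seen_message_ids

  # Create a public, named ETS set so any process can check IDs directly.
  def init do
    :ets.new(@table, [:set, :public, :named_table])
    :ok
  end

  # Returns true the first time an ID is seen and false for repeats.
  # :ets.insert_new/2 only inserts when the key is absent, so the check
  # and the write happen in a single atomic call.
  def first_seen?(message_id) do
    :ets.insert_new(@table, {message_id, System.monotonic_time(:millisecond)})
  end
end

DedupeSketch.init()
DedupeSketch.first_seen?("msg-1") # first arrival, so process it
DedupeSketch.first_seen?("msg-1") # duplicate, so skip it
```

A table like this grows without bound and never forgets an ID, which is exactly why expiration and size limits appear in the list of requirements further down.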

But this is the joy of open source! I went looking to see if someone had already implemented anything similar to what I wanted, and I stumbled across ConCache. I recalled reading Elixir in Action a few months prior, when I was first exploring Elixir, and realised that its author (Saša Jurić) was also ConCache's creator. ConCache looked (and is) great, so I pulled it down from Hex and began playing around with it, exploring the features and implementing a couple of prototypes for our internal use cases. The prototypes looked good, but there were certain things missing at that point that I thought would prove useful for us in particular. We needed several things specifically:

- Bounded cache sizes

- Cheap expiration of entries

- Monitoring infrastructure for any caches (hit rates, etc)

- Transactional execution, as needed

At the time, ConCache supported both entry expiration and transactional execution, but didn't have the cache restrictions and monitoring we needed. The other thing playing on my mind was that ConCache was backed by several processes, and so the message passing induced extra overhead and left the potential for bottlenecking. It was at this point that I decided to begin rolling my own library as an alternative. There are many things that people come to expect from a cache, which ETS does not offer out of the box. My intent was (and still is) to create a performant binding, backed by ETS, implementing many common features of some of the caching systems seen in other languages (I come from Java; think Guava).

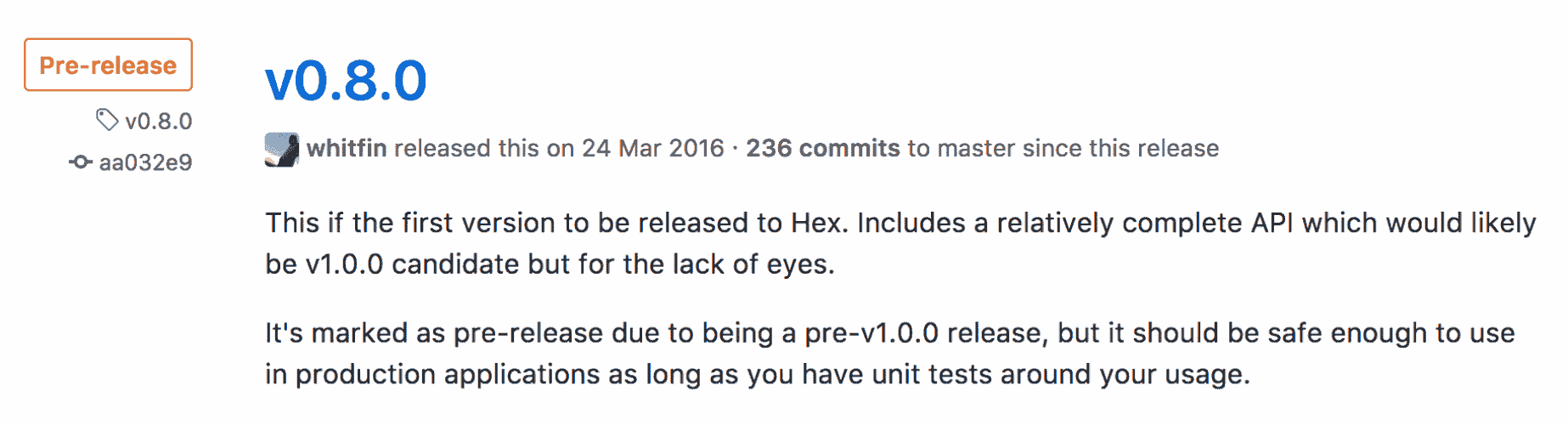

My first "full" implementation took around a month (I was working on it in my day job too, as it was needed for our new project), and it looked pretty good. I pushed it up to GitHub and tagged it as a v0.8, stating that it wasn't yet fully tested and hadn't been used in production, and to be cautious when using it. This implementation was based on use of Mnesia, as we were planning distribution inside our caches to replicate the changes across our cluster (more on this later).

We began to bake it into our project at work, and there were several iterations before tagging a v0.9 which actually did make it to production-like scale. At this point, Cachex had support for monitoring, execution hooks, transactions and expirations. That was pretty much the full set of what we wanted, so I was pretty happy with it. However, I soon realised that building on Mnesia was very likely a mistake - distribution is hard, and it would have been better to delegate that to the user rather than try to bake it in. What's more, Mnesia is far slower than ETS (naturally), and so we began to see some issues with caches not keeping up. I began to focus on performance improvements now that the features were pretty much complete.

In June, v1.2 was released, which included a few optimizations (which I should have done from the beginning) to remove the single GenServer which was bottlenecking us. Performance improved by roughly 3x compared with the previous version. This was good, but I felt that there were other places to be trimmed (Mnesia was still in play to maintain compatibility). It was at this point that we began to move it to production in our system, starting around the end of July in 2016. Releases of Cachex were then synced with production bumps of the system we were working on - it was kept up to date as a guinea pig for the open source world, but it was surprisingly stable. We never actually had a Cachex-related issue in production; everything was either found and fixed in staging, or was programmer error (which is amusing, as I wrote the library).

Once v1.2 hit production, work continued towards a v2.0 where I could "legally" break stuff (hat tip to SemVer). Mnesia was totally stripped out, but thankfully I managed to maintain the same interfaces in almost all cases - a new transaction manager was put in place to cover the cases Mnesia was handling, which turned out to be 5x as fast (combine this with the improvements in v1.2, and this was a big deal). There were also switches from function calls to macros in several places, optimized matching, and a bunch of other language-level performance improvements which were becoming more and more noticeable as the latency narrowed. v2.x also shipped with support for cache bounding via Policies, and implemented the base LRW (Least Recently Written) policy. As this is something I had wanted from the start, it was good to finally get it into Cachex, and this release was the first which allowed me to do so in a performant way, thanks to the removal of Mnesia and the custom transaction manager.
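For readers unfamiliar with the idea, the following is a rough sketch of what a least-recently-written bound means, written against raw ETS. It is not how Cachex implements its policy; `LrwSketch`, the `{key, value, written_at}` tuple layout, and the integer write times are all assumptions made for illustration.

```elixir
defmodule LrwSketch do
  # Hypothetical helper: trims an ETS table of {key, value, written_at}
  # tuples down to `limit` entries, dropping the oldest writes first.
  def trim(table, limit) do
    excess = :ets.info(table, :size) - limit

    if excess > 0 do
      table
      |> :ets.tab2list()
      |> Enum.sort_by(fn {_key, _value, written_at} -> written_at end)
      |> Enum.take(excess)
      |> Enum.each(fn {key, _value, _written_at} -> :ets.delete(table, key) end)
    end

    :ok
  end
end

table = :ets.new(:lrw_sketch, [:set, :public])

# Insert five entries with increasing write times, then bound the table to three.
for n <- 1..5, do: :ets.insert(table, {"key-#{n}", n, n})
LrwSketch.trim(table, 3)
# "key-1" and "key-2" were written first, so they are the ones evicted.
```

Copying and sorting the whole table on every trim is fine for a demonstration but would be far too slow for a busy cache, so a real implementation would need a cheaper way to find the oldest writes.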

At this point (v2.1), Cachex is extremely fast in comparison to pretty much anything out there, with little overhead against raw ETS. It becomes even faster (by around 0.3 µs/op) if you already have a static Cachex state available to you to call with, rather than just a cache name.

| Benchmark name | Iterations | Average time |
| --- | --- | --- |
| size | 10000000 | 0.76 µs/op |
| empty? | 10000000 | 0.85 µs/op |
| exists? | 10000000 | 0.90 µs/op |
| update | 10000000 | 0.99 µs/op |
| refresh | 1000000 | 1.01 µs/op |
| get | 1000000 | 1.06 µs/op |
| take | 1000000 | 1.06 µs/op |
| expire | 1000000 | 1.06 µs/op |
| persist | 1000000 | 1.16 µs/op |
| ttl | 1000000 | 1.16 µs/op |
| expire_at | 1000000 | 1.17 µs/op |
| del | 1000000 | 1.20 µs/op |
| set | 1000000 | 1.28 µs/op |
| decr | 1000000 | 1.93 µs/op |
| incr | 1000000 | 2.06 µs/op |
| get_and_update | 500000 | 5.55 µs/op |
| count | 500000 | 7.44 µs/op |
| keys | 200000 | 7.97 µs/op |

The benchmarks above are stored in the Cachex repo, and also include options for running with a static state, and with transactional contexts. I still thoroughly recommend benchmarking your specific use cases, so you know how caches perform in your circumstances.

As of July 1st, 2017, with roughly a year in production inside the system referenced above, I do have some numbers for those interested (generated by the hooks placed inside Cachex). These numbers are based on the single cache designed for de-duplication, rather than the entirety of the cache use on the cluster (which is a little more due to some other use cases).

Total operations: 46,250,454,309

Daily operations: 168,183,470

Average key space: 536,613
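To put those figures in perspective, a quick back-of-the-envelope calculation using only the numbers above (the variable names are mine):

```elixir
total_ops = 46_250_454_309
daily_ops = 168_183_470

# Whole days of traffic that the total represents at the daily average.
days = div(total_ops, daily_ops)

# Average operations per second, using 86,400 seconds in a day.
ops_per_second = div(daily_ops, 86_400)

IO.puts("#{days} days at roughly #{ops_per_second} operations per second")
# prints "275 days at roughly 1946 operations per second"
```

So the de-duplication cache alone averaged a little under two thousand operations every second, across about 275 days of recorded traffic.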

Going forward, I'm scoping out a v3 for Cachex to improve some of the existing interface and clean up some messiness with fallback handling and concurrency. If anyone has any suggestions for improvements, fixes or just general features, I would love to hear feedback! I'm also interested to hear whether there are any others out there using Cachex in production. Feel free to reach out to me in the comments, @_whitfin on Twitter, or on the Elixir Slack in #cachex!

As a final note, I just want to point out that ConCache remains a great project and works well for what it's designed for. It's well worth checking out before jumping to Cachex; as always, it depends on your use case!